This year, Open Source Summit North America was held in June as an umbrella conference composed of 14 events covering the most important technologies, topics, and issues affecting open source today. There were 2,771 attendees in total, 1,286 of whom attended in person in Austin, representing 1,041 organizations across 68 countries. The event attracted a diverse mix of open source community members from across the ecosystem: 54% of attendees were in technical positions, and developers made up more than a quarter of attendees. You can read the post-event report here. You can also view all of the event playlists on the Linux Foundation YouTube channel.

The ELISA Project was featured in several sessions and represented by ambassadors and community members at the conference. If you missed these presentations, you can watch the videos below:

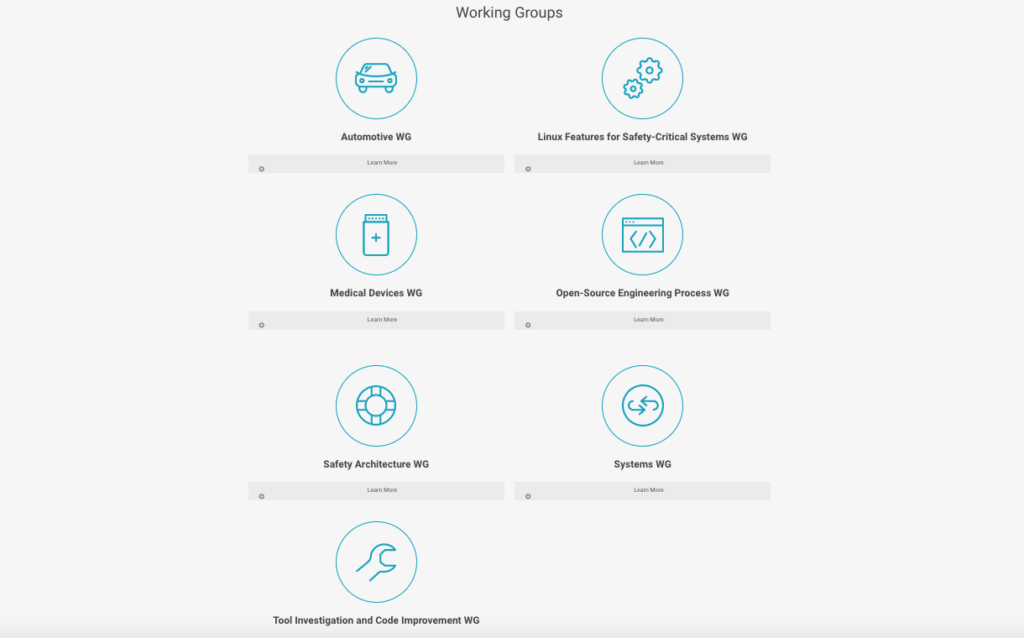

Enabling Linux in Safety Applications (panel discussion) – Gabriele Paoloni, Red Hat (ELISA board chair); Kate Stewart, Linux Foundation (ELISA Executive Director); Paul Albertella, Codethink (Open Source Engineering Process); Elana Copperman, Intel (Linux Features); Philipp Ahmann, Bosch GmbH (Automotive); Milan Lakhani, Codethink (Medical Devices)

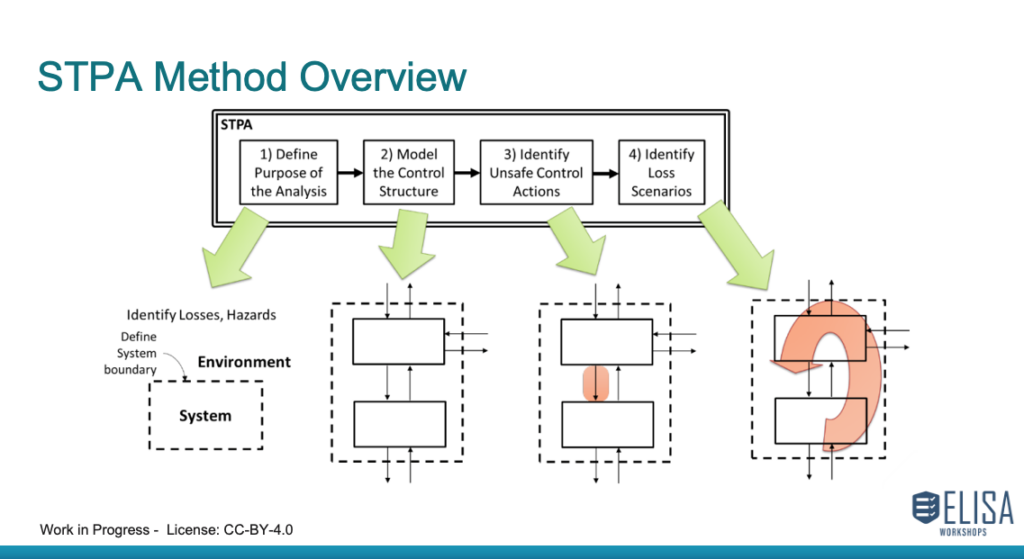

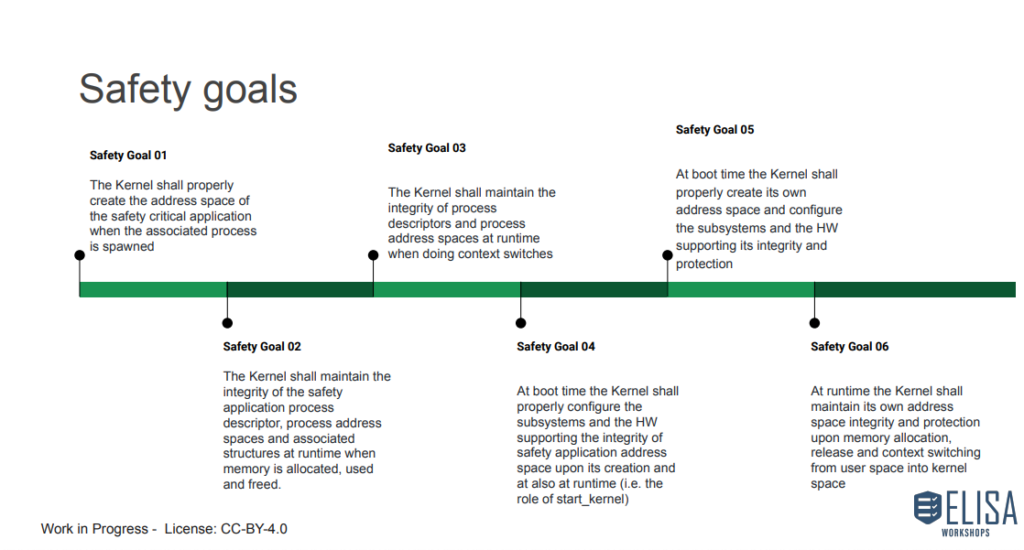

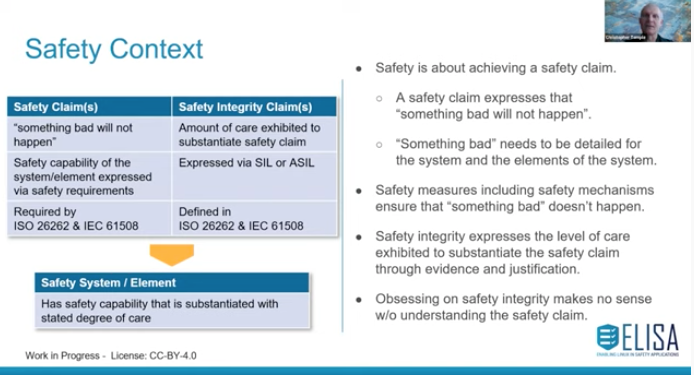

Meeting business and safety objectives while building safety-critical applications is a huge challenge for any industry, particularly for those without previous experience with open source and Linux. ELISA’s charter is to help industries navigate technical and non-technical challenges in order to bring the benefits of open source to safety applications and help organizations provide the rigor needed for certification. This panel features ELISA working group leads who will share their vision of making Linux a prominent player for functional safety (FuSa) applications in several industries. Join us to learn more about the project and how you can contribute to the community’s overall success.

Finding the Path from Embedded to Edge using Product Lines – Steffen Evers, Bosch.IO & Philipp Ahmann, Robert Bosch GmbH

Linux is used for many embedded device classes today. However, it is increasingly desirable to connect these devices with each other and with the cloud. Embedded container technology can make this easier by merging server/cloud and embedded technologies. However, it also brings new challenges, e.g. with respect to security, safety, traceability, and SBOMs. Using Linux across multiple device classes and product lines, and adding cloud technology, causes complexity and effort to explode.

In this talk, we describe how Bosch, and others, use embedded containers and “reference systems” to avoid redundant work and get a large number of embedded projects under control.

A reference system is an adjustable compilation of tools along with a pre-configured bundle of packages for a common use case and defined set of devices. This reuse significantly reduces development and maintenance costs, and speeds up the time to market. In this way, reference systems can form the base for your product lines.
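As a rough illustration of the reuse idea described above, a reference system can be thought of as a shared, adjustable bundle from which individual products are derived. The sketch below is a hypothetical model only: the `ReferenceSystem` class, its fields, the `derive` method, and all package and device names are assumptions made for illustration, not anything presented in the talk or taken from Apertis.

```python
from __future__ import annotations

from dataclasses import dataclass, replace


@dataclass(frozen=True)
class ReferenceSystem:
    """Hypothetical model: tools plus a package bundle for one use case."""
    name: str
    use_case: str
    devices: frozenset
    tools: frozenset
    packages: frozenset

    def derive(self, product: str, add=frozenset(), drop=frozenset()) -> ReferenceSystem:
        """Return a product variant that adjusts only the package set."""
        packages = (self.packages - frozenset(drop)) | frozenset(add)
        return replace(self, name=product, packages=packages)


# All names below are placeholders chosen for the example.
base = ReferenceSystem(
    name="infotainment-reference",
    use_case="automotive infotainment",
    devices=frozenset({"board-a", "board-b"}),
    tools=frozenset({"image-builder", "sdk"}),
    packages=frozenset({"kernel", "init-system", "container-runtime", "media-stack"}),
)

product = base.derive("product-x", add={"navigation-app"}, drop={"media-stack"})

# Packages the product inherits unchanged are maintained once, in the base.
shared = base.packages & product.packages
print(sorted(shared))
```

The more packages a product shares with its base, the less per-project maintenance remains, which is the cost argument made in the abstract.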

Bosch uses the in-house Debian-based embedded distribution “Apertis” as the basis for several reference systems, e.g. for automotive infotainment systems. In doing so, we push as much effort as possible from individual projects into Apertis, as the meta-layer. As a result, users can focus more on the actual functionality and applications. For example, one issue that we have addressed in the context of software management is the handling of GPLv3 in embedded devices. Another topic has been mainline support for kernel drivers.

BOF: SBOMs for Embedded Systems: What’s Working? What’s Not? – Kate Stewart

With the recent focus on improving cybersecurity in IoT and embedded systems, the expectation that a Software Bill of Materials (SBOM) can be produced is becoming the norm. Having a clear understanding of the software running on an embedded system has become essential, especially in safety-critical applications such as medical devices and energy infrastructure. Regulatory authorities have recognized this and are starting to expect it as a condition for engagement. This BOF will provide an overview of the emerging regulatory landscape, as well as examples of how SBOMs are already being generated today for embedded systems by open source projects such as Zephyr, Yocto and others, followed by a discussion of the gaps folks are seeing in practice, and ways we might tackle them.
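For readers new to the concept, an SBOM is essentially a machine-readable inventory of the components in a software image. The sketch below is a simplified illustration only: it does not follow SPDX, CycloneDX, or any other real SBOM format, and the `Component` fields, the `build_sbom` and `missing_license` helpers, and the component names are all assumptions made for this example. The missing-license check stands in for one kind of gap a team might look for.

```python
from __future__ import annotations

import json
from dataclasses import asdict, dataclass


@dataclass
class Component:
    """Hypothetical minimal component record (not a real SBOM schema)."""
    name: str
    version: str
    license: str


def build_sbom(product: str, components: list[Component]) -> dict:
    """Assemble a simple inventory, sorted by name for stable output."""
    ordered = sorted(components, key=lambda c: c.name)
    return {"product": product, "components": [asdict(c) for c in ordered]}


def missing_license(sbom: dict) -> list[str]:
    """List components whose license field is empty."""
    return [c["name"] for c in sbom["components"] if not c["license"]]


# Placeholder data; names and versions are invented for the example.
sbom = build_sbom(
    "example-device-firmware",
    [
        Component("example-rtos", "1.0.0", "Apache-2.0"),
        Component("example-lib", "0.3.1", ""),
    ],
)

print(json.dumps(sbom, indent=2))
print(missing_license(sbom))
```

Real projects would generate this data from the build system rather than by hand, and would emit it in a standardized format so that tools and regulators can consume it.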

Static Partitioning with Xen, LinuxRT, and Zephyr: A Concrete End-to-end Example – Stefano Stabellini, AMD

Static partitioning enables multiple domains to run alongside each other with no interference. They could be running Linux, an RTOS, or another OS, and all of them have direct access to different portions of the SoC. In the last five years, the Xen community has introduced several new features to make Xen-based static partitioning possible. Among them are Dom0less, which starts multiple static domains in parallel at boot, and Cache Coloring, which minimizes cache interference effects. Static inter-domain communication mechanisms were introduced this year, while “ImageBuilder” has been making system-wide configurations easier. An easy-to-use complete solution is within our grasp. This talk will show the progress made on Xen static partitioning. The audience will learn to configure a realistic reference design with multiple partitions: a LinuxRT partition, a Zephyr partition, and a larger Linux partition. The presentation will show how to set up communication channels and direct hardware access for the domains. It will explain how to measure interrupt latency and use cache coloring to eliminate cache interference effects. The talk will include a live demo of the reference design.

RTLA: Real-time Linux Analysis Toolset – Daniel Bristot De Oliveira, Red Hat

Currently, Real-time Linux is evaluated using a black-box approach. While the black-box method provides an overview of the system, it fails to provide a root cause analysis for unexpected values. Developers have to use kernel trace features to debug these cases, which requires extensive knowledge about the system and tedious tracing setup and teardown. Such analysis will be even more impactful after the PREEMPT_RT merge. To support these cases, since version 5.17, the Linux kernel includes a new tool named rtla, which stands for Real-time Linux Analysis. rtla is a meta-tool that consists of a set of commands that aim to analyze the real-time properties of Linux. Instead of testing Linux as a black box, rtla leverages kernel tracing capabilities to provide precise information about latencies and root causes of unexpected results. In this talk, Daniel will present two tools provided by rtla: the timerlat tool, which measures IRQ and thread latency for interrupt-driven applications, and the osnoise tool, which evaluates the ability of Linux to isolate a workload from interference from the rest of the system. The presentation includes examples of how to use the tool to find the root cause and collect extra tracing information directly from the tool.