The world of embedded systems is multifaceted, spanning hardware, software, services, and tools. The embedded world Exhibition & Conference brings the entire embedded community together once a year in Nuremberg and provides a unique overview of the state of the art in this versatile industry. Last year, the event hosted 952 exhibitors and more than 26,630 visitors from all over the world. This year's event, scheduled for April 9-11, is expected to be even larger.

The Enabling Linux in Safety Applications (ELISA) Project will be at the event with a system demonstrator in the Collabora booth (Hall 4, Booth 404).

The ELISA System Demonstrator:

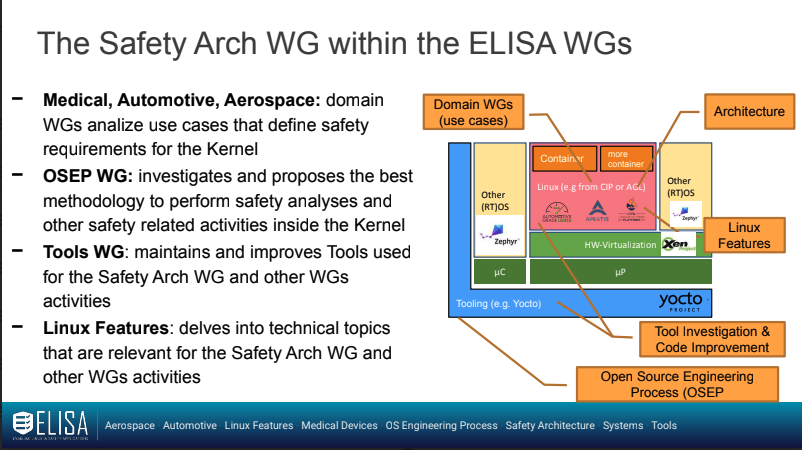

- Heterogeneous example system, fully based on open source, consisting of Linux, Zephyr (RTOS), and Xen (virtualization)

- Represents recent software architectures found in industries such as automotive (software-defined vehicles) and aerospace

- Focuses on reproducibility, serving as a blueprint for future systems

- Runs on the Xilinx Zynq UltraScale+ ZCU102 board and on QEMU

- Documentation on GitHub and CI on GitLab

- Documents various use cases, such as device passthrough of the SD card and NIC, paravirtualization of the network, and different Linux guests