This blog previously ran on the Codethink website.

Paul Albertella spoke at the ELISA November 2021 Workshop about how Codethink’s Deterministic Construction Service achieved ISO 26262 certification. In this article he explains the purpose of DCS and how it paves the way towards one of Codethink’s longer-term goals: establishing a viable approach to safety certification for Linux-based operating systems. Read more or watch the video from the ELISA Workshop below.

Background

Deterministic Construction Service (DCS) is Codethink’s design pattern for constructing critical software components. It defines a controlled process, based on an automated continuous integration (CI) workflow, for constructing and managing changes to software components, and to the tools used to build and verify them. A reference implementation of this design pattern was recently assessed by Exida and qualified using the ISO 26262 safety standard for use with automotive safety applications up to ASIL D.

DCS was made possible by many years of work on construction and integration tooling at Codethink, and builds directly on the previous efforts of open-source projects such as Baserock, BuildStream, Freedesktop SDK and Reproducible Builds. These projects have helped to establish and refine both the principles that inform DCS and the techniques used in its implementation.

As a tool, DCS is an important foundation for building a safety-certifiable Linux-based OS, but in creating and certifying it, Codethink had another goal: to validate the safety approach that we have been developing in collaboration with Exida and ELISA. This approach is called RAFIA (an acronym of Risk Analysis, Fault Injection and Automation); it was introduced in a previous article and further discussed in a second article.

Goals and principles

The goals of DCS are:

- To construct software in such a way that it is consistently reproducible

- To verify this property for a given set of inputs, for a given instantiation of DCS

- To make use of this property to inform verification and impact analysis

- To automate all of this as part of a continuous integration (CI) workflow

Reproducible, in this context, means that the outputs of the construction process (a binary fileset) for a given set of inputs (source code, dependencies, build instructions, etc.) for the target software (the components that we are constructing and verifying) must be demonstrably identical. That is to say, re-running the DCS process without explicitly changing any of the inputs must produce exactly the same set of binary outputs every time.
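One way to make "demonstrably identical" concrete is to summarise each build's output fileset as a manifest of content digests and compare the manifests. The following is a minimal sketch under that assumption, not the DCS implementation; the function names `fileset_manifest` and `is_reproducible` are hypothetical, and the sketch compares file paths and contents only (not permissions or timestamps).

```python
import hashlib
from pathlib import Path


def fileset_manifest(root: Path) -> dict[str, str]:
    """Map each file path (relative to root) to the SHA-256 of its contents."""
    manifest = {}
    for path in sorted(root.rglob("*")):
        if path.is_file():
            digest = hashlib.sha256(path.read_bytes()).hexdigest()
            manifest[path.relative_to(root).as_posix()] = digest
    return manifest


def is_reproducible(first_build: Path, second_build: Path) -> bool:
    """True when both builds contain the same files with identical contents."""
    return fileset_manifest(first_build) == fileset_manifest(second_build)
```

Keying the manifest on relative paths means that two builds placed in different directories can still be compared directly.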

Inputs, in this context, means everything that is required to construct and verify the software, which includes:

- the target software and its dependencies,

- the actions required to construct and verify these,

- the tools used to perform these actions, and

- the execution environments for these actions

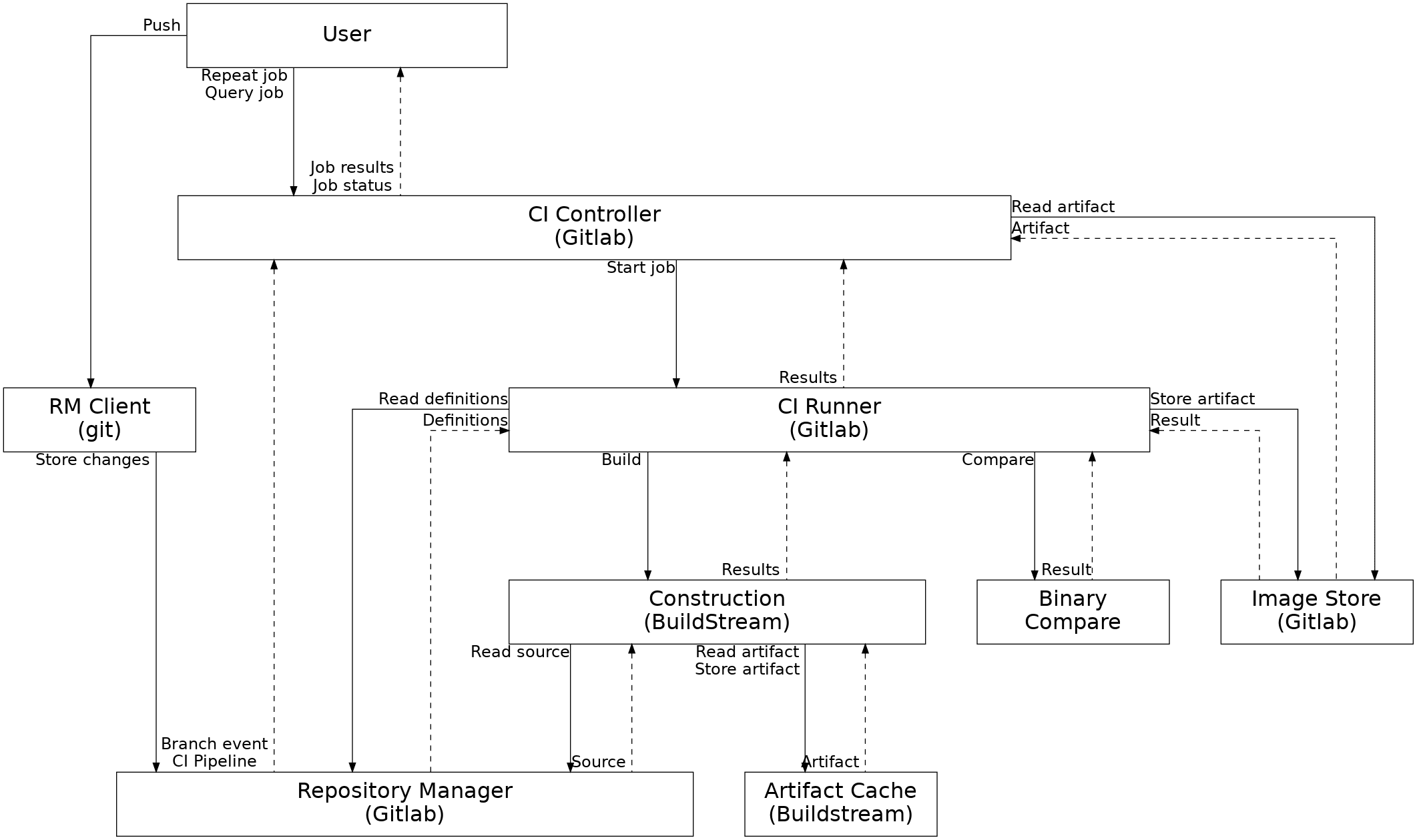

An instantiation of DCS is an implementation of the design pattern using a specific set of tools, configuration and infrastructure. The reference implementation, for example, is based on Codethink’s managed GitLab service and its associated servers, resources (e.g. CI runners), and configurations (e.g. access control), together with a set of hosted git repositories. These repositories contain the component tools used to realise DCS, the build and test inputs for these – including safety-related criteria and tests for the DCS design pattern – and the automation scripts that implement the overall service logic.

In order to have reproducible outputs, we must have clearly defined and consistent inputs. This means having fine-grained change and revision control over the corresponding files and their organisation into components, configurations, target systems, etc.

It is essential to track all inputs, including (but not limited to): the source code of the target software; any other build-time dependencies required to construct it; any run-time dependencies required to verify it; the configuration, calibration or test data used to inform or verify its behaviour; the tools used to perform construction and verification actions; the execution environments within which these actions are performed; the criteria that are used to evaluate verification actions; and instructions detailing the actions required to provide, build or verify all of these as part of an automated workflow.

We also need fine-grained control over our construction processes, which means that build actions must not only be consistently performed, but must be executed in a controlled environment to avoid the introduction of unspecified or unplanned inputs into the build process.
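To illustrate what "clearly defined and consistent inputs" might look like in practice, the sketch below pins each category of input to a revision or digest and derives a single fingerprint from the whole set. This is an illustrative assumption rather than how DCS records its inputs: the `BuildInputs` class, its field names and `input_fingerprint` are all hypothetical.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class BuildInputs:
    """Hypothetical record pinning every category of input to a revision."""
    source_revision: str                  # target software
    dependency_revisions: dict[str, str]  # dependency name -> revision
    build_instructions_revision: str      # construction and verification actions
    tool_revisions: dict[str, str]        # tool name -> revision
    environment_digest: str               # execution environment image


def input_fingerprint(inputs: BuildInputs) -> str:
    """Derive one digest from all inputs, independent of dictionary ordering."""
    canonical = json.dumps(asdict(inputs), sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()
```

If two pipeline runs report the same fingerprint but produce different binaries, that points to an input that is not captured in the record.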

Purpose

DCS verifies that we have control over our construction process and all of its inputs by comparing the binary outputs of two completed construction pipelines (automated executions of the specified construction steps and actions). If the results are identical, then the inputs and build actions may be considered under control. If the results differ, then the cause of the difference must be investigated, to determine whether an unspecified or uncontrolled input is involved in the construction process.
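The comparison step can be sketched as follows, assuming that the outputs of each pipeline have already been summarised as a mapping from file path to content digest. The names `compare_manifests` and `under_control` are hypothetical; the point is that a non-empty report identifies where an investigation should start.

```python
def compare_manifests(
    reference: dict[str, str], candidate: dict[str, str]
) -> dict[str, list[str]]:
    """Report the files that differ between two pipelines' output manifests."""
    ref_paths, cand_paths = set(reference), set(candidate)
    return {
        # Present in the reference build only.
        "missing": sorted(ref_paths - cand_paths),
        # Present in the candidate build only.
        "unexpected": sorted(cand_paths - ref_paths),
        # Present in both, but with different content digests.
        "changed": sorted(
            path for path in ref_paths & cand_paths
            if reference[path] != candidate[path]
        ),
    }


def under_control(report: dict[str, list[str]]) -> bool:
    """True when the two pipelines produced identical outputs."""
    return not any(report.values())
```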

Once we have control over our process and inputs, we can use the same principle to inform impact analysis for the constructed software. If a change to one of the inputs has no effect on the output binaries, then we can be confident that there will be no impact on the software’s behaviour or properties, which may avoid unnecessary re-testing. Similarly, if we were expecting a change to have an effect (e.g. to fix a bug) but the output binaries are unchanged, then we know that it has not had that effect, without having to re-test it.

These ‘no change’ cases may seem insignificant at first sight – after all, why would we want to make a change that has no effect? – but when maintaining complex software systems, the practice of regularly and systematically applying atomic changes can be invaluable. Not all changes to an input will affect the output for a given construction, because the source code may have conditional compilation sections, which are not used for a specific build. By atomically applying individual changes over time, instead of applying a large change set in one go, we are able to determine when a specific change does have an effect and use this to guide our impact analysis.
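As a rough illustration of this idea, suppose that a build is run after each atomic change and that a single digest of its outputs is recorded. The hypothetical helper below, `changes_with_effect`, then picks out the changes that actually altered the output; the change identifiers and digests are placeholders.

```python
def changes_with_effect(
    baseline_digest: str, history: list[tuple[str, str]]
) -> list[str]:
    """Return the changes whose build output differs from the preceding build.

    history is an ordered list of (change_id, output_digest) pairs, one per
    atomic change, each built on top of the previous one.
    """
    effective = []
    previous = baseline_digest
    for change_id, digest in history:
        if digest != previous:
            effective.append(change_id)
        previous = digest
    return effective
```

Because each entry corresponds to one atomic change, every identifier returned names a specific change to analyse, rather than a large change set that would have to be bisected.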

This becomes even more valuable if we are using artifact caching as part of a construction process. By storing the artifacts (binary outputs and intermediate objects) produced by previous build actions in a shared cache, we can dramatically reduce build times for large software components. But how can we be confident that these cached artifacts directly relate to our input files? Different caching solutions approach this in different ways, but by periodically rebuilding from source (e.g. with a weekend rebuild pipeline), and comparing the result with a build that uses cached artifacts, we can independently verify the integrity of our cache, regardless of the cache indexing strategy.
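A periodic cache check of this kind might look like the sketch below. It assumes a cache that can be read as a mapping from component name to artifact bytes, and a `rebuild_from_source` callable that performs a clean build of one component; both are hypothetical stand-ins for whatever the caching solution and build tool actually provide. A real check would more likely compare digests than whole artifacts.

```python
from typing import Callable


def verify_cache(
    cached_artifacts: dict[str, bytes],
    rebuild_from_source: Callable[[str], bytes],
) -> list[str]:
    """Return the components whose cached artifact differs from a fresh build."""
    mismatches = []
    for component, cached in sorted(cached_artifacts.items()):
        # The check ignores how the cache is indexed: only the artifact
        # contents are compared with the result of a clean rebuild.
        if rebuild_from_source(component) != cached:
            mismatches.append(component)
    return mismatches
```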

We can use the same principles to show that the property of reproducibility is independent of the specific instantiation of DCS – including host hardware, operating systems, compilers and other tools. This allows us to confirm that a new instantiation of DCS meets the design pattern requirements, by comparing the binary outputs for a reference build.

This approach can be extended to verify that a change to a tool used as part of the DCS instantiation, or as an input to the construction process, has no effect on the output. For tools that we expect to have no direct impact on the outputs, this is a confirmation of our analysis. For tools that we do expect to affect outputs (e.g. compilers), it either confirms that an upgraded tool has not introduced an unexpected change or, if a change is detected, drives our analysis of its potential impact.

Using RAFIA for certification

Codethink’s DCS reference implementation was certified by Exida based on the ISO 26262 tool qualification requirements. This was achieved using safety argumentation that was developed using RAFIA, and a safety lifecycle built around the DCS controlled process itself.

We used System-Theoretic Process Analysis (STPA) to analyse the risks associated with the specific purpose of DCS and to define safety requirements in the form of constraints. These were then used to derive tests to verify that a given DCS instantiation satisfies the applicable requirements, or to specify process requirements that must be applied by the user of DCS and verified as part of a safety assessment. We also identified loss scenarios that might lead to violation of constraints and developed fault injection tests to show that our mitigations were effective.

By applying this controlled process to all inputs to the certification assessment, we were able to demonstrate that we had addressed all of the applicable requirements in the standard, and provide evidence to support this. Controlled inputs included documentation and requirements as well as the build inputs. These included the documented STPA results, for which we developed a YAML data structure and validation tools, which have been shared in a new open source repository. For the reference implementation, all inputs were stored in git repositories managed by GitLab.

This allowed us to map evidence to individual certification criteria based on the applicable safety standard (ISO 26262 for DCS). For each of these, we documented how the criteria were satisfied and provided links to documents, source code, tests or CI-generated output that provided supporting evidence.

By tracking all certification criteria, assertions, and evidence in the same manner as the software, all potential changes could be managed by the same CI-driven change control process. We could use CI to verify that supporting evidence links are valid and up-to-date, and trace requirements to tests, and to test results. We were also able to produce human-readable reports for safety assessors directly from the stored and generated evidence for a given release.
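The kind of CI check described here can be sketched as follows. The data model is an assumption made for illustration only: the `Criterion` class, its fields and `check_traceability` are hypothetical and do not reflect the structure used in the reference implementation.

```python
from dataclasses import dataclass, field


@dataclass
class Criterion:
    """Hypothetical certification criterion with its evidence and tests."""
    identifier: str
    evidence_paths: list[str] = field(default_factory=list)
    tests: list[str] = field(default_factory=list)


def check_traceability(
    criteria: list[Criterion],
    existing_paths: set[str],
    test_results: dict[str, bool],
) -> list[str]:
    """Return a description of every broken evidence link or untraced test."""
    problems = []
    for criterion in criteria:
        if not criterion.evidence_paths:
            problems.append(f"{criterion.identifier}: no evidence linked")
        for path in criterion.evidence_paths:
            if path not in existing_paths:
                problems.append(f"{criterion.identifier}: missing evidence {path}")
        for test in criterion.tests:
            if test not in test_results:
                problems.append(f"{criterion.identifier}: no result for {test}")
            elif not test_results[test]:
                problems.append(f"{criterion.identifier}: {test} failed")
    return problems
```

A CI job could fail the pipeline whenever the returned list is non-empty, so that a change which breaks an evidence link is caught by the same change control process as a change which breaks the software.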

Role in safety and future work

As we have seen, DCS allows us to:

- Verify that we have control of all of our inputs, including dependencies

- Avoid retesting or re-validating unchanged binaries

- Identify and investigate differences when changes are detected

- Verify that our process is isolated from environmental disturbances

- Show that tool upgrades do not impact previously validated binaries

But how can DCS contribute to a broader safety process? And how will this help us to certify a Linux-based OS?

Safety standards such as ISO 26262 identify the key engineering processes that are expected to be used in the development of safety-relevant software, as well as organisational processes and controls (e.g. quality and safety management) that are required to ensure that the engineering processes are correctly and consistently followed. However, the standards provide only limited guidance on implementing such processes, as every organisation is expected to have its own particular approach and tools.

A deterministic construction process provides a foundation for many of these engineering processes, as well as a way to monitor and enforce the required organisational controls. The DCS design pattern defines a consistent, automated and verifiable foundation for implementing a safety lifecycle for large-scale and complex software components. It was developed with Linux and open source software in mind, but the principles can be applied to any software, and many aspects of the RAFIA process can be applied to hardware components as well.

DCS and RAFIA enable software components and their associated documentation, as well as the required engineering and organisational process criteria and automation tools, to be managed and maintained in close alignment with the software development process. They also support key processes as follows:

Change Management and Configuration Management are, as we have seen, fundamental parts of the DCS design pattern, and also key topics in safety standards. DCS allows us to verify that we have control over all changes to our software and its configurations. This can be especially important when components or component dependencies are provided by a supply chain.

Verification of the software (e.g. through testing and static analysis) is required to confirm that it satisfies both its functional requirements and its safety requirements. DCS gives us control over all of the inputs to verification actions, as well as the tools and execution environments that are used to perform them. Using it as part of RAFIA also allows us to identify and specify safety requirements for components and tools, and to manage these requirements in close coordination with the software.

Validation of the software as part of its target system is required to confirm that it fulfils its intended purpose in the system, including its role in fulfilling the system’s safety goals. This may require the software to be constructed for one or more system configurations (CPU architecture, hardware configurations, etc.) and deployed to a specific test environment (e.g. a test rig). DCS provides a way to manage this, and RAFIA provides a way to validate safety mitigations at the system level, by defining fault injection cases for software components.

Impact analysis is required to determine whether a change to software may impact the target system’s safety goals. DCS can be used to drive this process for software components, by identifying when changes actually have an impact on the deployed binaries, and helping to identify the specific change that resulted in this impact.

Tool qualification is used to provide confidence in the use of software tools. As demonstrated by the DCS reference implementation, RAFIA can provide a basis for qualifying and validating open-source toolchains, and DCS can be used to control the specific source and configuration of tools that are used. DCS can also help to classify new tools by determining their impact on the constructed output, and to validate tool upgrades by determining their impact on a known and verified previous build.

On this basis, we believe that DCS represents a solid foundation for defining and constructing a Linux-based OS for a safety-related use case. Furthermore, the qualification of DCS demonstrates that the RAFIA approach can be used to provide the required safety argumentation and evidence to achieve a formal safety certification for tools based on open source software; we are confident that this can be extended to support the same goal for a safety-related system.